The Human Factor: Best Practices for Responsible AI

By Ken Seier / 22 Sep 2020 / Topics: Artificial Intelligence (AI), Featured, Digital transformation, Analytics

Six essential strategies for the decision-makers and developers behind the model

Over the past few years, the democratization of Artificial Intelligence (AI) has begun to fundamentally alter the way we identify and resolve everyday challenges, from conversational agents for customer service to advanced computer vision models designed to help detect early signs of disease.

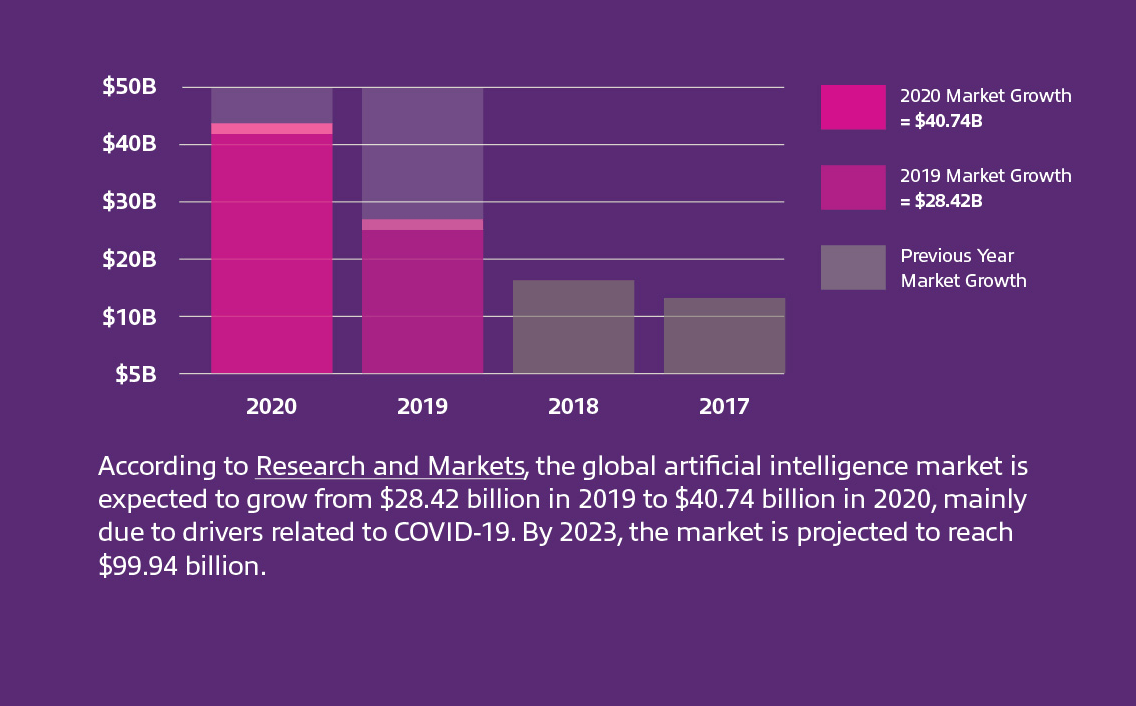

In 2020, the ongoing need to protect public health while maintaining a critical edge in an uncertain market has only accelerated investments in AI. Solutions such as facial recognition for contactless entry, computer vision for mask detection and apps for contact tracing are enabling businesses to reopen to the public more safely and strategically. But unlike a traditional technology implementation, these solutions raise additional social, legal and ethical challenges that must be considered during development and implementation.

So, how do we design innovative, intelligent systems that solve complex human challenges without sacrificing core values like privacy, security and human empathy?

It starts with a commitment from everyone involved to ensure even the most basic AI models are developed and managed responsibly.

A few misconceptions about AI

While the potential value of modern AI solutions can’t be overstated, artificial intelligence is still in its infancy as a technology solution (think somewhere along the lines of the automobile back in the 1890s). As a result, there remain some fundamental public misconceptions around what AI is and what it’s capable of. Gaining a better understanding of the capabilities and limitations of this technology is key to making responsible, effective decisions.

The term “AI” is often associated with a broad human-like intelligence capable of making detailed inferences and connections independently. This is known as general or “strong” AI, and at this point it’s confined to the realms of science fiction. By comparison, modern machine learning models are fundamentally limited in what they can do, which is why they are known as narrow or “weak” AI. These models are built and trained to accomplish very specific tasks but are incapable of any broader learning.

Cameras used to detect masks or scan temperatures, for example, might leverage some form of face detection to recognize the presence of a human face. But unless explicitly trained to do so, a computer vision system won’t have the ability to identify or store the distinct facial features of any individual.

Even within a limited scope of design, AI isn’t infallible. While total accuracy is always the goal, systems are only as good as their training. Even the most carefully programmed models are fundamentally brittle and can be thrown off by anomalies or outliers. Algorithms may also grow less accurate over time as circumstances or data inputs change, so solutions need to be routinely re-evaluated to make sure they’re continuing to perform as expected.

The effectiveness of the model, the amount of personal information gathered, when and where data is stored, and how often the system is tuned all depend on the organization’s decision-makers and developers.

This is where the principles of responsible AI come into play.

Responsible AI practices

Responsible AI refers to a general set of best practices, frameworks and tools for promoting transparency, accountability and the ethical use of AI technologies.

All major AI providers use a similar framework to guide their decision-making — and there’s a wealth of scholarly research on the topic — but in general, guidelines focus on a few core concepts.

Start with the tough questions.

- Before your organization even begins researching AI solutions, it’s important to consider what’s driving your initiative. Could the same outcome be achieved using a “lower tech” solution? If not, you’ll need to take a hard look at the risks associated with your particular application.

- What harm may be caused, not just with the intended use case, but also if the model were to be applied in a slightly different way? Identifying potential issues ahead of time will allow your team to safeguard against misuse.

Establish robust governance.

- Once you’ve committed to an AI initiative, you’ll need to determine who will own the commitment to responsible AI at the highest level. This is usually a CEO or a small interdisciplinary group made up of both technical and non-technical roles, ideally with some representation from a statistics background. Statistical knowledge is critical to setting patterns and processes that ensure the accuracy and ongoing relevance of the model.

- Your governance team will need to establish a system of accountability to guide decision-making and prevent the prioritization of cost over integrity. The planning process should include documentation of potential flaws, as well as a plan for when the program becomes “out of tune” or fails. How will accuracy be measured over time? What are the implications of a bad model? What’s the process for updating or removing the system? This must be an iterative and ongoing conversation through and beyond deployment.
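
As one way to make "How will accuracy be measured over time?" concrete, the sketch below tracks accuracy over a rolling window of predictions whose true outcomes have since been verified. The class name, window size and accuracy floor are all hypothetical choices for illustration; a governance team would set its own values and its own process for what happens when the check fails.

```python
from collections import deque


class AccuracyMonitor:
    """Track accuracy over the most recent verified predictions."""

    def __init__(self, window_size=500, min_accuracy=0.95):
        # Both defaults are illustrative assumptions, not recommended values.
        self.window = deque(maxlen=window_size)
        self.min_accuracy = min_accuracy

    def record(self, predicted, actual):
        """Store whether one prediction matched its verified outcome."""
        self.window.append(predicted == actual)

    def accuracy(self):
        """Return accuracy over the current window, or None if it is empty."""
        if not self.window:
            return None
        return sum(self.window) / len(self.window)

    def needs_review(self):
        """True once the window is full and accuracy is below the floor."""
        full = len(self.window) == self.window.maxlen
        return full and self.accuracy() < self.min_accuracy
```

A scheduled job could call `needs_review()` and, when it returns `True`, open a ticket for the governance team rather than retraining or disabling the model automatically.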

Commit to appropriate and secure use of data.

- Security should be factored into the development process from day one. Best practice in any realm of data collection is to gather only the minimum needed to accomplish a given task. Don’t retain any information, particularly Personally Identifiable Information (PII), that isn’t explicitly necessary downstream. The best way to secure it is to not store it at all.

- By processing data on the edge, many AI solutions can be designed to eliminate the need to transmit data unless a response is required. But regardless of infrastructure, you’ll need a robust strategy outlining where your data is going to live, how it will be encrypted and what the auditing process will be for compliance purposes.
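
The "gather only the minimum" principle can be sketched as an allow-list applied before anything is written to storage. The field names below are hypothetical, loosely modeled on a mask-detection event; the point is that anything not explicitly needed downstream is dropped by default.

```python
# Hypothetical field names for illustration; adjust to your own schema.
ALLOWED_FIELDS = frozenset({"timestamp", "camera_id", "mask_detected", "confidence"})


def minimize_record(raw_event, allowed_fields=ALLOWED_FIELDS):
    """Return a copy of the event containing only allow-listed fields."""
    return {key: value for key, value in raw_event.items() if key in allowed_fields}


raw_event = {
    "timestamp": "2020-09-22T08:15:00Z",
    "camera_id": "lobby-1",
    "mask_detected": True,
    "confidence": 0.97,
    "face_image": b"...",        # PII: never needed downstream in this sketch
    "employee_name": "unknown",  # PII: dropped before storage
}

stored_event = minimize_record(raw_event)
```

An allow-list is used instead of a block-list so that a new field added upstream is excluded until someone decides it is needed.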

Factor in the human loop.

- AI is at its best when it’s driving human decision-making — not replacing it. As a result, a fundamental understanding of human cognitive patterns is essential to creating an effective model.

- One of the challenges in how humans interact with AI is over-reliance. When a system is 99% accurate, users will invariably come to trust its results over time, and they stop catching the 1% of mistakes that might previously have stood out. To avoid this, humans must play a regular role in the decision-making process, and measures must be taken to validate both the model and the person doing the monitoring. These types of controls will help maintain the integrity of the solution over time.

- Additionally, because AI often drives decisions that are hard to refute or adjust, it’s critical that systems be designed with human fail-safes. All AI processes that affect human outcomes must have an opt-out mechanism that allows a qualified individual to make an independent decision. The more important the decision, the more involved a human should be.
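
A minimal sketch of these controls, under assumed names and thresholds: high-stakes decisions always go to a person, low-confidence predictions are escalated, and a small random sample of confident predictions is also sent for review so that reviewers keep seeing cases the model considers easy. The function `route_decision`, the 0.9 threshold and the 5% audit rate are hypothetical.

```python
import random


def route_decision(confidence, high_stakes, threshold=0.9, audit_rate=0.05, rng=random):
    """Return "human_review" or "auto" for a single model prediction.

    threshold and audit_rate are illustrative assumptions, not recommendations.
    """
    if high_stakes:
        # The more important the decision, the more involved a human should be.
        return "human_review"
    if confidence < threshold:
        # The model itself is unsure, so a person decides.
        return "human_review"
    if rng.random() < audit_rate:
        # Random spot check of confident predictions to counter over-reliance.
        return "human_review"
    return "auto"
```

Passing a seeded `random.Random` instance as `rng` makes the spot-check sampling reproducible for audits.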

Beware of bias.

- Every culture has a set of inequitable values that over time become ingrained in society and are subsequently reflected in the data that society collects. AI simply identifies patterns, which means that human prejudices in data often become embedded in the system. More and better data on a dominant subgroup leads to more accurate or emphasized results for that subgroup, often at the expense of others. Fundamentally biased data leads to fundamentally biased results.

- Bias can also be introduced in the algorithm itself, so it’s important that systems are thoughtfully designed. Decision-makers and developers must use the best of their human intelligence to safeguard against the inequitable application of any given model. The onus is on the organization to ask the right questions upfront and look for potential issues ahead of time, knowing that any bias that isn’t uncovered will be perpetuated.
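
One simple way to start looking for such issues is to compare model accuracy across subgroups on a labeled evaluation set. The sketch below assumes each record is a `(group, predicted, actual)` tuple; the function names are hypothetical, and a large gap is a prompt for investigation, not proof of bias or of its absence.

```python
from collections import defaultdict


def accuracy_by_group(records):
    """Compute accuracy per group from (group, predicted, actual) tuples."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for group, predicted, actual in records:
        total[group] += 1
        correct[group] += int(predicted == actual)
    return {group: correct[group] / total[group] for group in total}


def accuracy_gap(records):
    """Return the difference between the best and worst group accuracy."""
    scores = accuracy_by_group(records)
    if not scores:
        return 0.0
    return max(scores.values()) - min(scores.values())
```

Accuracy is only one lens; the same grouping could be applied to false positive or false negative rates, depending on which kind of error causes harm in a given application.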

Work toward Explainable AI (XAI).

- For AI to have value as a decision-making tool, users need to be able to trust the system and its outputs. By its nature, AI is complex and can often be difficult to understand. Explainability and interpretability are key to ensuring stakeholders within and outside your organization are empowered to understand how and why your model has come to a given conclusion.

- Ensure you have documentation in place for a full range of audiences, from data scientists to regulators to everyday consumers. Communicate early and openly with employees and customers about the capabilities of the technology, what data is being collected, and how it’s being used and stored. This will help to build trust in your AI solution and the decisions being made by your leadership team.

Where intelligence meets empathy

Responsibly leveraged, the potential benefits of AI are nearly limitless, from improving business processes to fighting disease.

Regardless of application, everyone involved in the decision-making process has a role to play in developing something that contributes to the business and humanity as a whole in a positive way. When in doubt, take a strategic pause to step back and consider the broader implications of the questions your team faces and err on the side of caution.

Ultimately, no matter how advanced the technology, we have to be humans first.
